URGENT: Real-time visual 3D mapping using photometric, geometric and semantic information

The CNRS-I3S (Université de Nice-Sophia Antipolis) is looking for a postdoctoral candidate or engineer to work on “Real-time visual 3D mapping using photometric, geometric and semantic information”, starting ASAP in 2018.

The deadline for applications is set for the 15th of March.

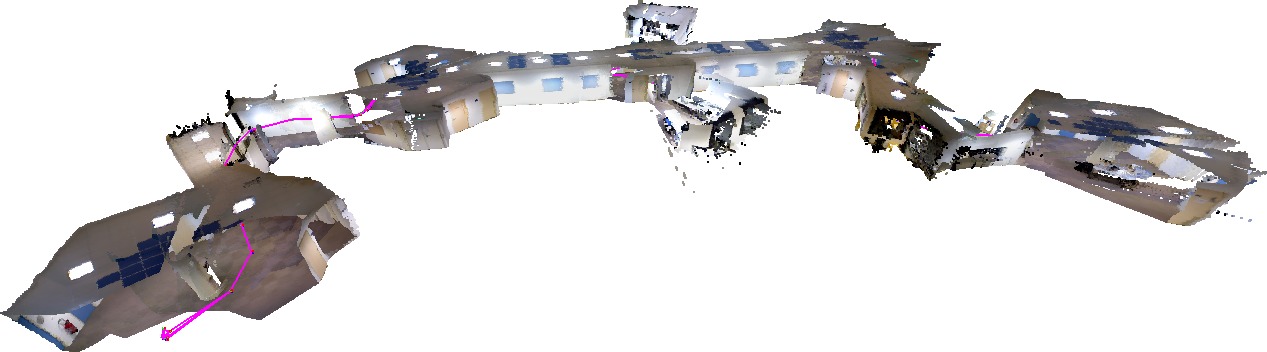

The aim of the engineer or postdoctoral position is to improve the state of the art in visual SLAM (simultaneous localisation and mapping) by considering photometric, geometric and semantic information. In this project we use an orientable RGB-D sensor (providing color and depth) to estimate the pose (position and orientation) of a humanoid robot with respect to a 3D reconstruction of the environment in real time. Particular attention will be paid to the robustness of the approach so that the sensors continuously provide safe and accurate measurements, especially in the context of an incrementally changing environment. Robustly mapping the environment in 3D with semantic labels using an RGB-D sensor will provide essential information for multi-contact and walking planning of the robot in its environment, and for performing visual servoing tasks with respect to object parts. This process will be assisted by simultaneously estimating the complete 3D pose of the robot at all times.

The position is part of the European H2020 project COMANOID aimed at controlling a humanoid robot to be used for manufacturing at Airbus. With a budget of 4.25 M€, COMANOID brings together the CNRS and INRIA in France, the DLR from Germany, the University of Rome 1 from Italy and Airbus Group.

The position will be based in the Robot Vision project at the CNRS-I3S UNS laboratory in Sophia-Antipolis (France).

Experience in computer vision, C++ programming, estimation, 3D geometry, OpenGL, deep learning, RGB-D sensors, real-time vision and/or the ROS environment would be appreciated.

Applicants should send their resume and motivation letter to Dr. Andrew Comport.