Research

Announcements

NEW: Check our internship and PhD proposals related to the upcoming ANR NAMED project; we'll need a postdoc too!

Feb 1, 2024: Start of our ANR PRCI / SNSF project NAMED (Neuromorphic Attention Models for Event Data) with SCALab and ETHZ.

Towards a neuro-inspired machine learning

The vast majority of modern computer vision approaches rely heavily on machine learning, including deep learning. For almost two decades, deep convolutional artificial neural networks have been the reference method for many machine learning and vision tasks: classification, object or action detection, face alignment, etc. The availability of both very large amounts of annotated data and huge computational resources has led to remarkable progress in this approach. However, this success comes with a significant human cost for manual annotation and huge energy consumption for the training of deep CNNs.

Departing from the mainstream deep learning approaches widely used in machine learning, I am interested in a particular type of neural network: Spiking Neural Networks (SNNs), which are close to the biological model. In SNNs, neurons emit outgoing pulses (action potentials, or spikes) asynchronously, in response to incoming stimulations that are themselves asynchronous. This type of neural network has the advantage of supporting mostly unsupervised learning (which limits the need for manually annotated data) thanks to bio-inspired Spike-Timing Dependent Plasticity (STDP) learning rules.

The STDP rule updates synaptic weights based on the cause-effect relationships observed between incoming and outgoing pulses. The purpose of this rule, inspired by Hebb's law, is the strengthening of the incoming connections that are the cause of the outgoing impulses.
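As an illustration of the cause-effect principle described above, here is a minimal sketch of a pair-based STDP update with exponential time windows. This is a common textbook form, not necessarily the exact rule used in our work; the function names and all parameter values (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`, weight bounds) are assumptions chosen for illustration.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in ms, assumed unit)."""
    dt = t_post - t_pre
    if dt > 0:
        # Incoming spike precedes the outgoing spike: it may have caused it,
        # so the connection is strengthened (more so for short delays).
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Incoming spike arrives after the outgoing spike: it cannot be
        # the cause, so the connection is weakened.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def update_weight(w, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply the STDP change and keep the weight within [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_delta_w(t_pre, t_post)))
```

For example, `update_weight(0.5, t_pre=10.0, t_post=15.0)` returns a value slightly above 0.5, while swapping the two spike times returns a value slightly below 0.5.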

The ultimate goal is to use these models of neural networks to solve modern machine learning and computer vision tasks while bypassing two of the main pitfalls of current methods: their need for large amounts of annotated data and their high energy consumption. The expected impact is a paradigm change in machine learning and computer vision, with respect to the popular data-hungry and power-hungry methods. SNNs show many interesting features for this paradigm change, such as their unsupervised training with STDP rules and their implementability on ultra-low-power neuromorphic hardware. And yet, a number of challenges lie ahead before they become a realistic alternative for facing the ever-growing demand in machine learning.

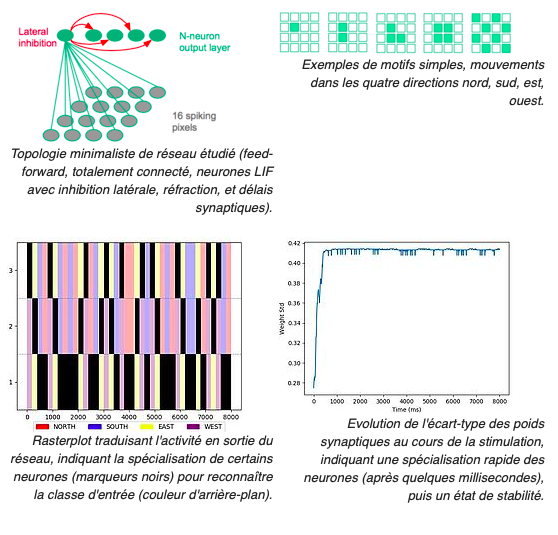

Unsupervised learning of temporal patterns

I am interested in the characterization of motion using Spiking Neural Networks (SNNs). As part of the thesis of Mr. Veïs Oudjail (Oct. 2018 – Dec. 2022), our first work focused on the unsupervised detection of the direction of movement of a pattern. The first results obtained with SNNs have demonstrated the ability of these networks to recognize and characterize the motions of simple patterns composed of a few pixels (points, lines, angles) in an image. We consider video sequences encoded in the Address-Event Representation (AER) format, like that produced by Dynamic Vision Sensor (DVS) type sensors. These sensors differ from conventional video sensors because, instead of producing RGB or grey-level image sequences sampled at a fixed rate, they encode binary positive or negative brightness variations, independently for each pixel. Thus, each variation of a pixel at a given instant results in a corresponding event transmitted asynchronously, with a high sampling frequency. Moreover, this encoding eliminates much of the redundancy in motion information - at the cost of losing static texture information (spatial contrast). Thus, these sensors offer a form of native dynamic motion representation.
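To make the encoding concrete, the sketch below emulates it from two conventional grey-level frames: a pixel emits a positive or negative event when its log-intensity changes by more than a contrast threshold. This is only a frame-based approximation under stated assumptions, since a real sensor works asynchronously per pixel and does not use frames; the function name, the `(x, y, t, polarity)` tuple layout, and the `threshold` and `eps` values are hypothetical.

```python
import math


def frames_to_events(prev, curr, t, threshold=0.2, eps=1e-3):
    """Emit (x, y, t, polarity) tuples for pixels whose brightness changed.

    prev, curr: 2D lists of intensities in [0, 1] (rows of pixels).
    t: timestamp assigned to every event of this frame pair.
    """
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # Change in log-intensity; eps avoids log(0) for black pixels.
            diff = math.log(c + eps) - math.log(p + eps)
            if diff >= threshold:
                events.append((x, y, t, +1))   # brightness increase
            elif diff <= -threshold:
                events.append((x, y, t, -1))   # brightness decrease
            # Unchanged pixels produce no event: static content is dropped.
    return events
```

Note how pixels that do not change produce nothing at all, which is where both the reduced redundancy and the loss of static texture come from.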

The asynchronous nature of SNN makes it a model that can naturally handle this type of data: the events generated by the sensor can be interpreted as spikes to feed the input layer of the network.
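One possible way to interpret events as input spikes is sketched below: each (pixel, polarity) pair is mapped to one input neuron, and the event timestamps become that neuron's spike times. The `Event` type, the neuron indexing scheme, and the time unit are assumptions made for illustration, not a description of a specific sensor driver or simulator API.

```python
from collections import defaultdict
from typing import NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    t: float        # timestamp (unit assumed, e.g. microseconds)
    polarity: int   # +1 for a brightness increase, -1 for a decrease


def input_neuron_index(ev, width, height):
    """One input neuron per (pixel, polarity) pair: two stacked pixel maps."""
    channel = 0 if ev.polarity > 0 else 1
    return channel * width * height + ev.y * width + ev.x


def events_to_spike_trains(events, width, height):
    """Group event timestamps by input neuron, in chronological order."""
    trains = defaultdict(list)
    for ev in sorted(events, key=lambda e: e.t):
        trains[input_neuron_index(ev, width, height)].append(ev.t)
    return dict(trains)
```

No conversion to frames is needed with this mapping: the temporal precision of the events is preserved in the spike times fed to the input layer.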

Optical flow characterization typically relies on complex processing, operators, and calculations related to the nature of conventional RGB physical sensors that produce image sequences. The bio-inspired porting of conventional processing for vision requires rethinking how to process visual information, working directly with temporal contrast and motion. This suggests more efficient processing.

Beyond computational efficiency, we also expect better-quality information, free of the noise induced by the usual capturing and preprocessing steps (demosaicing, compression, etc.). The objective here is to propose a bio-inspired processing chain, from the sensor to the processing of visual information.

NAMED project (ANR PRCI with Swiss NSF, 2024-2028)

Neuromorphic Attention Models for Event Data

Human perception of a complex visual scene requires a cognitive process of visual attention that sequentially directs the gaze towards visual regions of interest, so that relevant information is acquired selectively through foveal vision. Foveal vision allows maximal acuity and contrast sensitivity in a small region around the gaze position, whereas peripheral vision allows for a large field of view, albeit with lower resolution and contrast sensitivity. This cognitive process mixes bottom-up attention driven by saliency and top-down attention driven by the demands of the task (recognition, counting, tracking, etc.). While numerous works have investigated visual attention in standard RGB images, it has barely been exploited for the recently developed event sensors (DVS). Inspired by human perception, the interdisciplinary NAMED project aims at designing neuromorphic event-based vision systems for embedded platforms such as autonomous vehicles and robots. A first stage will investigate and develop new bottom-up and top-down visual attention models for event sensors, in order to focus processing on relevant parts of the scene. This stage will require understanding what drives attention in event data. A second stage will design and implement a hybrid digital-neuromorphic attentive system for ultra-fast, low-latency, and energy-efficient embedded vision. This stage will require setting up a dual vision system (foveal RGB sensor and parafoveal DVS), designing Spiking and Deep Neural Networks, and exploiting a novel system-on-chip developed at ETH Zürich. A last stage will validate and demonstrate the results by applying the robotic operational platform to real-life dynamic scenarios such as autonomous vehicle navigation, ultra-fast object avoidance and target tracking.

Financial support

The financial support of ANR and SNSF is 801 601 € for 48 months (February 2024-January 2028).

Consortium

| Partner | Country |
| --- | --- |
| Université Côte d'Azur | France |
| Université de Lille | France |
| ETH Zürich | Switzerland |

Coordinator

Jean Martinet, Université Côte d'Azur

See also https://webcms.i3s.unice.fr/named/

APROVIS3D European project (CHIST-ERA, 2020-2023)

Analog PROcessing of bioinspired Vision Sensors for 3D reconstruction

The APROVIS3D project targets analog computing for artificial intelligence in the form of Spiking Neural Networks (SNNs) on a mixed analog and digital architecture. The project includes field-programmable analog arrays (FPAA) and SpiNNaker, applied to a stereopsis system dedicated to coastal surveillance using an aerial robot. Computer vision systems widely rely on artificial intelligence and especially neural network based machine learning, which recently gained huge visibility. The training stage for deep convolutional neural networks is both time and energy consuming. In contrast, the human brain has the ability to perform visual tasks with unrivalled computational and energy efficiency. It is believed that one major factor of this efficiency is the fact that information is vastly represented by short pulses (spikes) at analog (not discrete) times. However, computer vision algorithms using such a representation are still lacking in practice, and its high potential is largely underexploited. Inspired by biology, the project addresses the scientific question of developing a low-power, end-to-end analog sensing and processing architecture for 3D visual scenes, running on analog devices without a central clock, and aims to validate it in real-life situations. More specifically, the project will develop new paradigms for biologically inspired vision, from sensing to processing, in order to help machines such as Unmanned Aerial Vehicles (UAVs), autonomous vehicles, or robots gain high-level understanding from visual scenes. The ambitious long-term vision of the project is to develop the next-generation AI paradigm that will eventually compete with deep learning. We believe that neuromorphic computing, mainly studied in EU countries, will be a key technology in the next decade. It is therefore both a scientific and strategic challenge for the EU to foster this technological breakthrough.

The consortium from four European countries offers the unique combination of expertise that the project requires. SNN specialists from various fields, such as visual sensors (IMSE, Spain), neural network architecture and computer vision (University of Lille, France) and computational neuroscience (INT, France), will team up with robotics and automatic control specialists (NTUA, Greece) and low-power integrated systems designers (ETHZ, Switzerland) to help geoinformatics researchers (UNIWA, Greece) build a demonstrator UAV for coastal surveillance (TRL5). Beyond their shared interest in analog-based computing and computer vision, all team members have a lot to offer given their different and complementary points of view and expertise. Key challenges of this project will be end-to-end analog system design (from sensing to AI-based control of the UAV and 3D coastal volumetric reconstruction), energy efficiency, and practical usability in real conditions. We aim to show that such a bioinspired analog design will bring large benefits in terms of the power efficiency and adaptability needed to make coastal surveillance with UAVs practical and more efficient than digital approaches.

Financial support

The financial support of CHIST-ERA is 867 560 € for 36 months (April 2020-March 2023).

Consortium

| Partner | Country |
| --- | --- |
| Université Côte d'Azur | France |
| Université de Lille | France |
| Institut de Neurosciences de la Timone | France |
| Instituto de Microelectrónica de Sevilla IMSE-CNM | Spain |
| University of West Attica | Greece |
| National Technical University of Athens | Greece |
| ETH Zürich | Switzerland |

Coordinator

Jean Martinet, Université Côte d'Azur

See also http://www.chistera.eu/projects/aprovis3d

Current students

PhD students

- Mr. Antoine Grimaldi (Oct. 2020 – now, 50% with Dr. Laurent Perrinet, INT). Ultra-fast vision using Spiking Neural Networks. Institut de Neurosciences de la Timone, Aix Marseille Université. APROVIS3D project.

- Mr. Karim Haroun (March 2022 – now, 33% with Dr. Karim Ben Cheida and Dr. Thibault Allenet, CEA). Visual attention-based dynamic inference for autonomous mobile system perception. CEA funding.

- Ms. Dalia Hareb (Nov. 2022 – now, 50% with Prof. Benoît Miramond, LEAT). Real-time neuromorphic semantic scene segmentation from event data. Université Côte d'Azur. Ministry grant.

- Mr. Hugo Bulzomi (Nov. 2023 – now, 50% with Dr. Yuta Nakano, IMRA). Low-energy neuromorphic computer vision. i3s-Undisclosed Company. IMRA SAS and ANRT CIFRE funding.

Amélie's poster at Neuromod inauguration 2021

Amélie's talk at CBMI 2021

MSc students

Currently none.

DS4H tutorship students

Alireza Foroutan Torkaman, Louis Vraie (Spring 2024). Analysis of aerial images for the protection of marine ecosystems.

Laurent Peraldi (Spring 2024). Event-based stereo matching.

Past students at Université Côte d'Azur

MSc students

Azeed Abdikarim (EIT DIGITAL, Mar-Aug 2023). Extending NeRF with Novel Parametrizations to Create Neural Event Fields.

Hugo Bulzomi (Master CS, Mar-Jul 2023, co-supervised with Yuta Nagano, IMRA Europe). Spiking Neural Networks and Neuromorphic Hardware for Autonomous Vehicles.

Anvar Zokhidov (Modeling for Neuronal and Cognitive Systems, Oct 2022-Feb 2023). Visual saliency detection model applied to event data converted to RGB frames.

Yao Lu (July-Nov 2022). Event lip reading.

Dalia Hareb (ENSIMAG, Mar-Aug 2022). Neuromorphic segmentation of event scenes.

Huiyu Han (MAM Polytech, Mar-Sept 2021). Neuromorphic Stereo Vision with Event Cameras.

Nicolas Arrieta, Nerea Ramon, Ivan Zabrodin (M1-EIT DSC project 2020-2021). Synaptic delays for temporal pattern recognition.

Simone Ballerio (Master CS, Feb-Jul 2020, with Dr. Andrew Comport). Semantic segmentation for event-based scenes. CSI grant.

Rafael Mosca (EIT DIGITAL, Mar-Sept 2020). Spiking neural networks for event-based stereovision. DS4H grant.

Polytech final year project students

Hugo Bulzomi (M2 II-IAID) and Marcel Schweiker (M2 EIT) (2022-2023). Event lip reading.

Kevin Constantin (2022-2023). Synaptic delays for spatio-temporal pattern recognition.

Yao Lu (2021-2022). Spatiotemporal reduction of the size of event data.

Raphael Romanet (2021-2022). Neuromorphic hardware comparison: Loihi vs SpiNNaker.

Yilei Li and Yijie Wang (2019-2020). Scene segmentation from event-based videos.

Aloïs Turuani and Morgan Briancon (2019-2020, with Dr. Marc Antonini). Video coding using Spiking Neural Networks.

Khunsa Aslam (2019-2020, Erasmus+). Synaptic delays for spatio-temporal pattern recognition.

Polytech 4th year project students

Sacha Carnière and Guillaume Ladorme (2019-2020). HistoriyGuessR, a history-oriented game inspired by Geoguessr.

Amine Legrifi (2019-2020). Mobile image deblurring using machine learning.

DS4H tutorship students / TER

Yasmine Boudiaf, Laurent Peraldi, Alireza Foroutan Torkaman, Louis Vraie (Fall 2023). Analysis of aerial images for the protection of marine ecosystems.

Antoine Bruneau (Fall 2023). Unsupervised continual learning of discrete patterns.

TER-M1 Spring 22 with Raphael JULIEN, Sami BENYAHIA, Carla GUERRERO, Quentin MERILLEAU: Convolutional SNN on SpiNN-3 board

TER-M1 Spring 22 with Raphael PIETZRAK and Juliette SABATIER: Event dataset generation

Noah Candaele (2022-2023). Stereo matching for event cameras demonstrator.

Hugo Bulzomi (2021-2022). ConvSNN with synaptic delays for temporal pattern recognition.

Thomas Vivancos (2020-2021). Neuromorphic hardware comparison: Human Brain Project SpiNNaker vs Intel Loihi.

Guillaume Cariou (2020-2021). Performance assessment of Intel Neural Compute Stick attached to a Raspberry Pi.

Kevin Alessandro (2019-2020, with Dr. Marc Antonini). Video coding using Spiking Neural Networks.

Past PhD students at Université Côte d'Azur

- Ms. Amélie Gruel (Oct. 2020 – now, 50% with Dr. Laurent Perrinet). Spiking neural networks for event-based stereovision. CNRS. APROVIS3D project.

Past PhD students at Université de Lille

Mr. Veïs Oudjail (Oct. 2018 – Dec. 2022, 100%). Spiking Neural Networks for computer vision. University of Lille. Ministry grant. Now working on his own start-up project.

Ms. Jalila Filali (Oct. 2015 – Aug. 4 2020, 50% with Dr. Hajer Baazaoui, ENST, Tunis). Ontology and visual features for image annotation. Ministry grant from Tunisia. Now looking for a postdoc.

Mr. Cagan Arslan (Oct. 2015 – Oct. 28 2020, 50% with Prof Laurent Grisoni). Visual data fusion for human-machine interaction. University of Lille. Ministry grant. Now unsure.

Mr. Rémi Auguste (Nov. 2010 – July 2014, 75% with Prof Chaabane Djeraba). Dynamic person recognition in audiovisual content. University of Lille. French ANR PERCOL project, ANR/DGA REPERE challenge. Now CEO of weaverize.

Ms. Amel Aissaoui (Sept. 2010 – June 2014, 75% with Prof Chaabane Djeraba). Bimodal face recognition by merging visual and depth features. Ministry grant from Algeria. Now back at University of Lille after serving several years as an Assistant Professor at University of Science and Technology Houari Boumediene in Algeria.

Mr. Ismail El Sayad (July 2008 – Dec. 2011, 50% with Prof Chaabane Djeraba). A higher-level visual representation for semantic learning in image databases. Ministry grant. Now Lecturer at University of the Fraser Valley after serving several years as an Associate Professor at Lebanese International University.