Direct visual localization and mapping for mobile robots in different lighting conditions

Keywords: Visual SLAM, Mobile Robotics, Photometry, Illumination, Computer Vision, AI

Student profile and experience

The student is expected to have knowledge of computer vision and/or AI, C++ programming, Python, Matlab, mathematics, and optics. English writing skills will be of particular value.

Research team:

The Ph.D. student's activities will take place in the Robot Vision group (http://www.i3s.unice.fr/robotvision/) at the I3S-CNRS laboratory of the University of Côte d'Azur (UCA), under the supervision of Dr. Christian Barat (UCA) and Dr. Andrew Comport (CNRS).

Summary

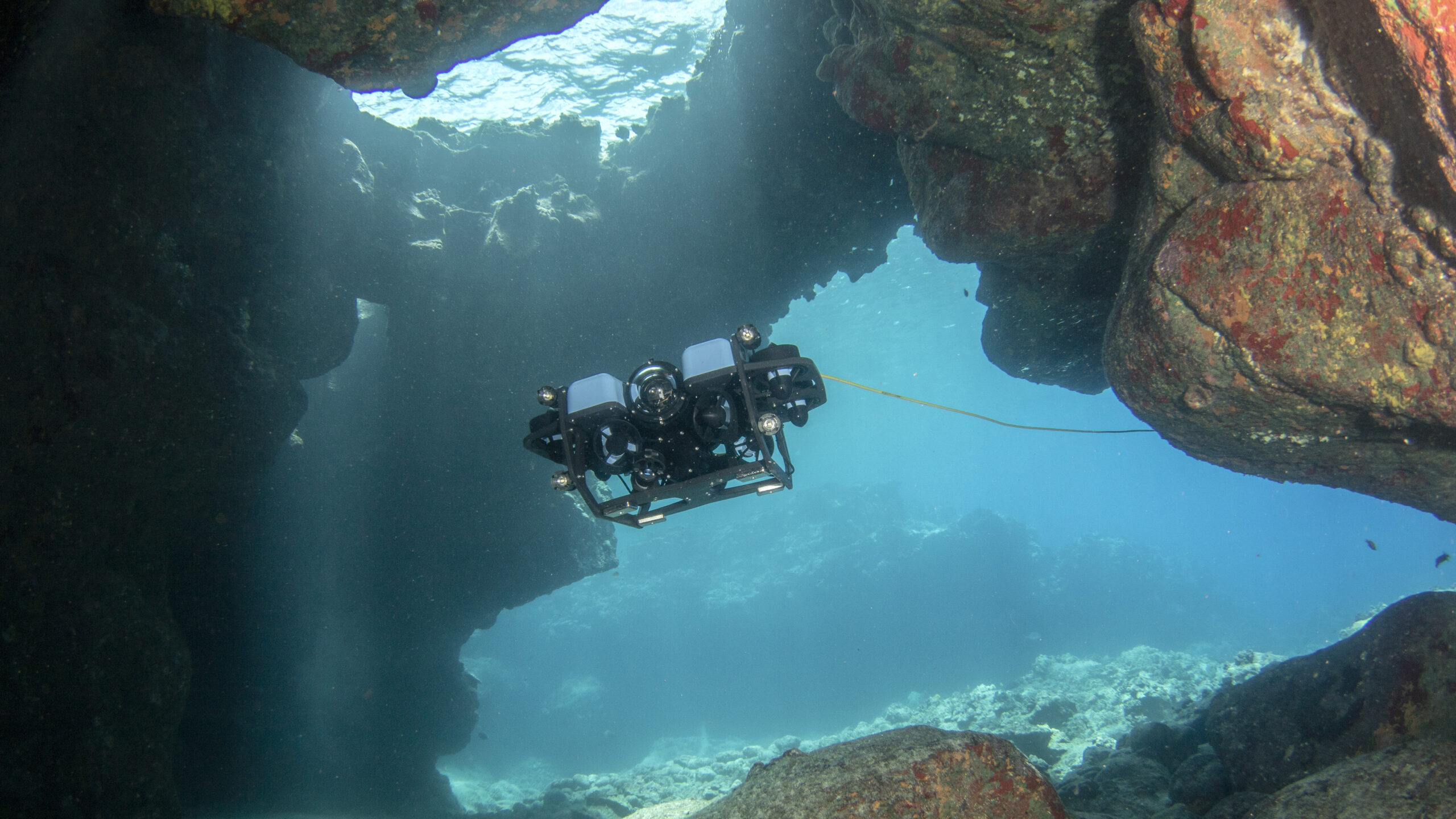

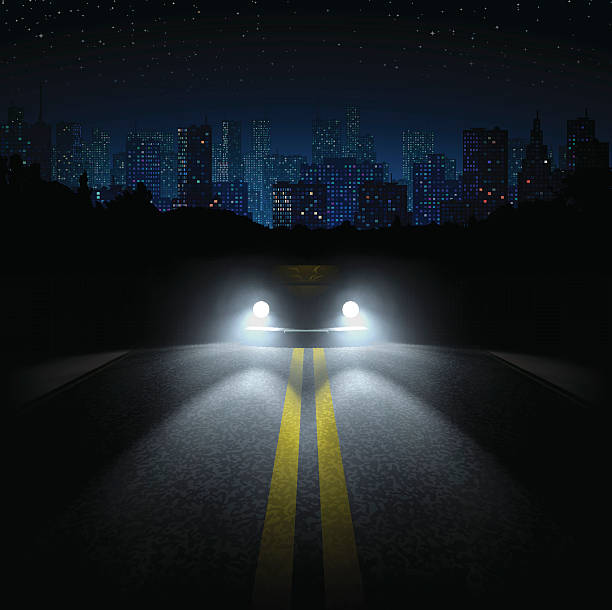

Direct Visual Localization and Mapping (DVLM) is an essential aspect of autonomous robotics for real-world applications such as underwater exploration, autonomous cars, and drones. DVLM systems enable robots to localize themselves in the environment and create maps for navigation, which is crucial for successful autonomous operation. However, the performance of DVLM systems can be severely degraded by varying light conditions, such as day-night changes, deep-sea conditions, and surface conditions. Developing a DVLM system that can adapt to these light conditions is therefore crucial to achieving robust and reliable performance. Additionally, comparing the performance of AI-based and physical models can provide insight into the limitations of each approach.

The objective of this research is to investigate the performance of DVLM systems in different light conditions and to explore their applicability to underwater mobile robots, autonomous cars, and drones. The study aims to develop a robust DVLM system that can adapt to varying light conditions and to compare the performance of AI-based and physical models.

Context

The candidate will undertake his/her Ph.D. within the Robot Vision team of the I3S-CNRS/UCA laboratory (https://www.i3s.unice.fr/robotvision/). The team specializes in real-time visual localization, AI learning for dense 3D mapping and semantic segmentation, visual SLAM, sensor modeling, robotics, and augmented reality. It collaborates with several international partners (Melbourne University, Brown University, IIT Genoa), several industrial partners (Renault Software Labs, Airbus, Thales), and numerous national partners (Univ Toulon, IFREMER, OCA, INRIA). It has a strong history of participating in European projects such as MEMEX and COMANOID and of creating startups (PIXMAP).

This project is of great interest to our current industrial and academic partners, particularly in underwater robotics and autonomous driving. There will be opportunities to apply this work to these application domains and build a relationship with these partners.

Method

The study will involve the following steps:

- Literature review: A comprehensive review of existing DVLM techniques and their performance under varying light conditions will be conducted.

- Data collection: Data will be collected using underwater mobile robots, autonomous cars, and drones equipped with cameras in different light conditions. This data will be used to train and evaluate AI-based and physical models.

- DVLM system development: A DVLM system that can adapt to varying light conditions will be developed, and its performance will be compared with existing techniques.

- Performance evaluation: The developed DVLM system's performance will be evaluated using various metrics such as accuracy, efficiency, and robustness in different light conditions.

- Comparison of AI-based and physical models: The performance of the developed AI-based and physical models will be compared, and the limitations of each approach will be identified.
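As an illustration of the performance evaluation step, accuracy could be reported per lighting condition as the root-mean-square error between estimated and ground-truth positions. The following is only a minimal sketch under stated assumptions: the metric choice, the function name `translation_rmse`, and the toy trajectories are hypothetical and are not taken from the project. It also assumes that both trajectories are already time-synchronized and expressed in the same reference frame (for instance, ground truth from a motion-capture system).

```python
import math


def translation_rmse(estimated, ground_truth):
    """RMSE of the Euclidean distance between paired 3D positions.

    Assumes both trajectories are time-synchronized and expressed in the
    same reference frame (no alignment step is performed here).
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical toy data: one ground-truth path and two estimated paths,
# one per lighting condition. The values are illustrative only.
ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
runs = {
    "day": [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0), (2.0, 0.1, 0.0)],
    "night": [(0.0, 0.0, 0.0), (1.0, 0.3, 0.0), (2.0, 0.4, 0.0)],
}

# One accuracy figure per lighting condition.
errors = {name: translation_rmse(traj, ground_truth) for name, traj in runs.items()}

for name, value in errors.items():
    print(f"{name}: RMSE = {value:.3f} m")
```

Reporting one figure per condition keeps the comparison between lighting regimes, and between AI-based and physical models, on a common scale. Efficiency and robustness would need their own measures.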

Expected Results

The research will result in a robust DVLM system that can adapt to varying light conditions and provide reliable performance for underwater mobile robots, autonomous cars, and drones. The study will also provide insight into the limitations of AI-based and physical models for DVLM systems. The outcomes of this research can be applied to real-world applications, such as underwater exploration and autonomous vehicles.

Experimental conditions

The team is a partner in the national initiative centered on specialized platforms and equipment, in particular robotics equipment for drones. The team will also make available equipment for mobile robotics (humanoid Nao, vehicles, drones), 3D scanning, sensors (stereo, 10 x mobile phone, 10 x Azure Kinect, RGB-D, IMU, event cameras, ...), and localization (Optitrack), and has a pool for underwater robotics experimentation. The team also has a cluster of high-performance computers (8x RTX3090) and access to the national supercomputer Jean Zay, specifically for AI training.

References

Viktor Rudnev, Mohamed Elgharib, William Smith, Lingjie Liu, Vladislav Golyanik, Christian Theobalt, Neural Radiance Fields for Outdoor Scene Relighting, European Conference on Computer Vision (ECCV), 2022.

Christian Barat, Andrew I. Comport, Active High Dynamic Range Mapping for Dense Visual SLAM, IEEE/RSJ International Conference on Intelligent Robots and Systems, Sep 2017, Vancouver, Canada.

Maxime Meilland, Christian Barat, Andrew I. Comport, 3D High Dynamic Range Dense Visual SLAM and Its Application to Real-time Object Re-lighting, International Symposium on Mixed and Augmented Reality, Oct 2013, Adelaide, Australia.

Maxime Meilland, Andrew I. Comport, Patrick Rives, Real-time Dense Visual Tracking under Large Lighting Variations, British Machine Vision Conference, Aug 2011, Dundee, Scotland, United Kingdom. pp.45.1-45.11.

Christian Barat, Ronald Phlypo, A Fully Automated Method to Detect and Segment a Manufactured Object in an Underwater Color Image, EURASIP Journal on Advances in Signal Processing, 2010. pp.1-10.

Yang Cong, Changjun Gu, Tao Zhang, Yajun Gao, Underwater robot sensing technology: A survey, Fundamental Research, Volume 1, Issue 3, 2021, Pages 337-345.

Asha Anoosheh, Torsten Sattler, Radu Timofte, Marc Pollefeys, Luc Van Gool, Night-to-Day Image Translation for Retrieval-based Localization, arXiv, 2018.