RESEARCH ACTIVITIES

MARINE AND UNDERWATER ROBOTICS

As a member of the OSCAR team, my current research priority is Marine and Underwater Robotics. We aim to transfer our knowledge and techniques in Visual Servo Control, Nonlinear Observer and Nonlinear Control theories (originally developed for aerial robotics) to the field of Marine and Underwater Robotics, which presents new challenges and opportunities. For the past 7 years, we have been active in this field through our involvement in 4 national and European projects. Several visual servoing solutions for Autonomous Underwater Vehicles (AUVs), such as stabilization in front of a visual target and pipeline following, have been developed and validated in collaboration with our industrial partners (CYBERNETIX, ALSEAMAR). We have recently developed a man-portable underwater robotic vehicle to facilitate experimental validation of our control and estimation algorithms. In particular, a novel homography-based visual servoing algorithm that does not rely on a costly velocity sensor (e.g. a Doppler velocity log, DVL) has been validated on this platform for station keeping. Experimental demonstrations of this low-cost solution have attracted the interest of a number of specialized companies.

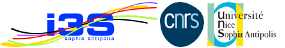

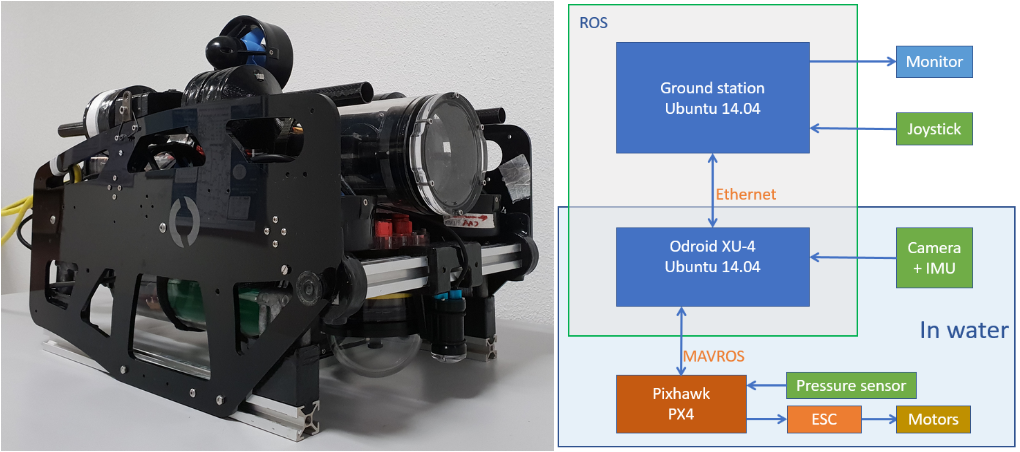

The AUV platform and Hardware/Software architecture developed by I3S-OSCAR team

RESEARCH THEME

Nonlinear Control and Sensor-based Control of Autonomous Underwater Vehicles

Autonomous navigation of AUVs in an unknown or partially known and dynamically changing oceanic environment is challenging. The scientific issues stem largely from the fact that the AUV may navigate in cluttered areas where global acoustic positioning systems are unusable or insufficiently precise for safe navigation. In this case, the AUV must rely on exteroceptive sensors and sensor-based navigation strategies. Although several types of sensors can be used, video cameras remain excellent candidates, as they are considerably cheaper than acoustic sensors and provide rich information at a high update rate. Among the many applications of the vision-based control paradigm, we have investigated the following two control problems for AUVs: homography-based stabilization and pipeline following.

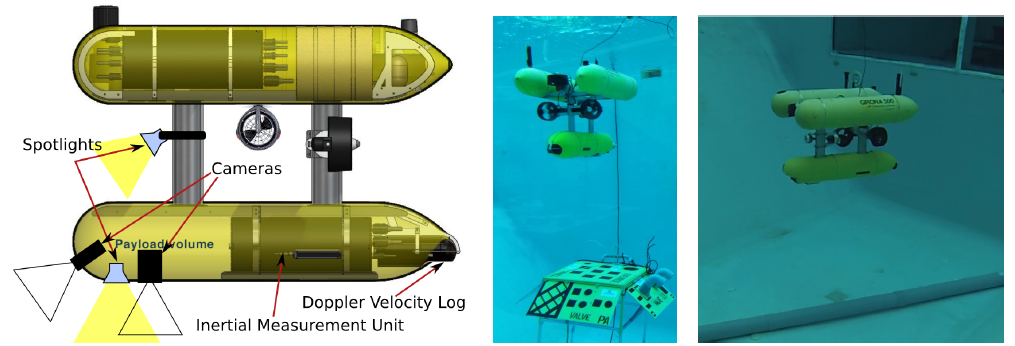

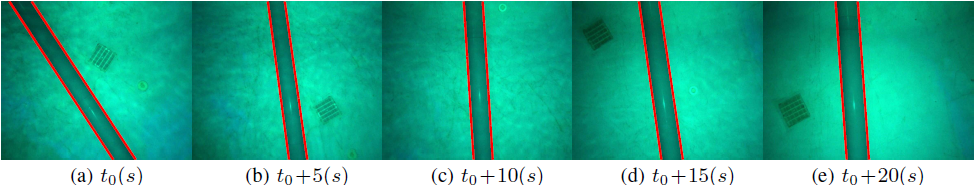

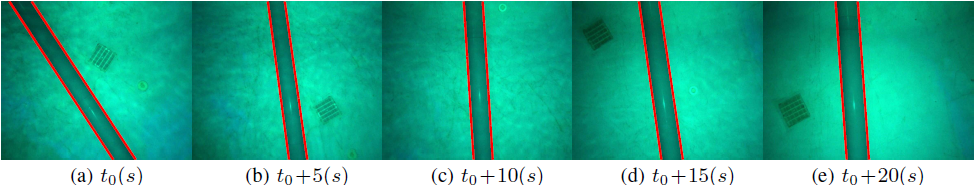

Validation of the proposed HBVS controller using a downward-looking camera

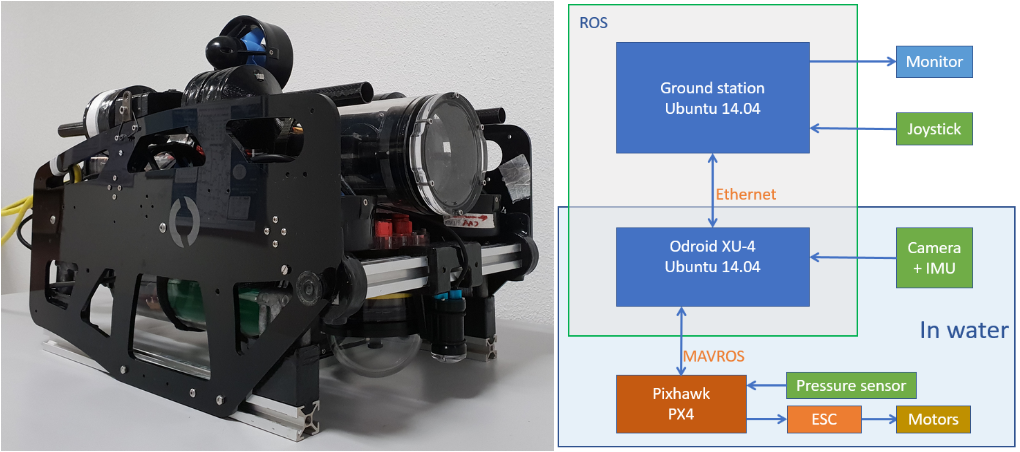

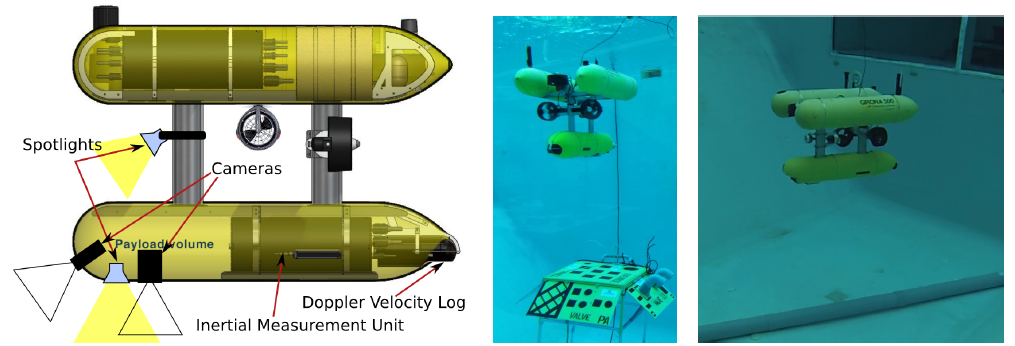

The Girona-500 AUV (left) used by the company Cybernétix for experimental validations of our vision-based controllers for homography-based stabilization and positioning (middle) and for pipeline following (right)

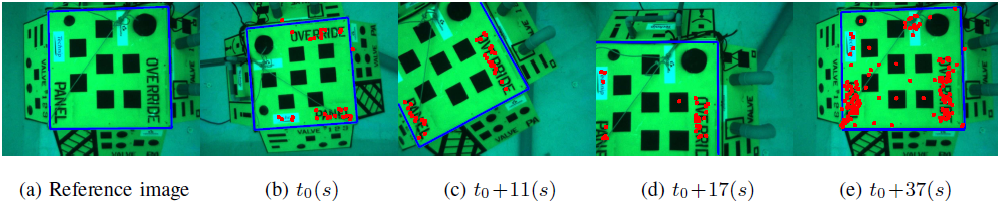

Vision-based stabilization and positioning

This functionality is useful for AUV navigation close to underwater infrastructures when high-resolution imaging is needed for inspection or intervention tasks. In the context of monocular vision, we have proposed a homography-based visual servoing (HBVS) control approach for fully-actuated AUVs, using the homography matrix that encodes the transformation between two images of the same planar target. The proposed control approach has been experimentally validated by my industrial collaborator Cybernétix on the Girona-500 AUV. This work is published in IEEE Transactions on Robotics. An extension of this work to the case where linear velocity measurements are unavailable (i.e. no DVL is used) has recently been developed and submitted to IEEE Transactions on Control Systems Technology.
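As a reminder of what the homography encodes, the standard Euclidean form is sketched below. The notation is an assumption made for illustration and may differ from the conventions of the cited papers: $P^*$ and $P$ are the coordinates of a target point in the reference and current camera frames, with $P = R P^* + t$; the target plane satisfies $n^{*\top} P^* = d^*$; and $K$ is the camera intrinsic matrix.

$$
P = R P^* + t\,\frac{n^{*\top} P^*}{d^*} = H P^*, \qquad H = R + \frac{1}{d^*}\, t\, n^{*\top}, \qquad p \cong K H K^{-1} p^*
$$

Here $p^*$ and $p$ are the homogeneous pixel coordinates of the same point in the two images, and $\cong$ denotes equality up to scale. Under this form, $H = I$ corresponds to $R = I$ and $t = 0$, which is why regulating the homography towards the identity stabilizes the camera at the reference pose.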

Validation of the proposed HBVS controller using a downward-looking camera

Vision-based pipeline following

This problem is highly relevant for the inspection of submerged linear infrastructures such as pipelines and cables by AUVs. We have proposed an image-based visual servoing (IBVS) controller for pipeline/cable following by fully-actuated AUVs, using a monocular camera and Plücker coordinates to represent lines. Experimental validations have recently been carried out by Cybernétix on the Girona-500 AUV. This work is published in Control Engineering Practice.
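For readers unfamiliar with Plücker coordinates, the following is a minimal sketch of the representation only, not of the published controller. A 3D line through points `A` and `B` is described by a unit direction `u` and a moment `m = A x u`. The point values and function names are hypothetical, and coordinates are assumed to be expressed in the camera frame with the optical centre at the origin.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def plucker_from_points(A, B):
    """Return (u, m): unit direction u and moment m = A x u of the line AB."""
    d = tuple(b - a for a, b in zip(A, B))
    n = norm(d)
    if n == 0.0:
        raise ValueError("A and B must be distinct points")
    u = tuple(c / n for c in d)
    return u, cross(A, u)

# Hypothetical pipeline axis in the camera frame (metres).
A = (0.0, 0.5, 2.0)
B = (1.0, 0.5, 2.0)
u, m = plucker_from_points(A, B)

# With a unit direction, |m| is the distance from the origin to the line.
distance = norm(m)

# Every point P on the line satisfies m . P = 0, so its normalized image
# point p = (X/Z, Y/Z, 1) satisfies m . p = 0: m defines the image line.
p_B = (B[0] / B[2], B[1] / B[2], 1.0)
residual = dot(m, p_B)
```

The moment `m` is normal to the plane containing the line and the optical centre, so its direction can be measured from the image alone, which is what makes this representation convenient for image-based control.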

Validation of the proposed vision-based controller for pipeline following

Related publications

- L.-H. Nguyen, M.-D. Hua, G. Allibert, T. Hamel. A homography-based dynamic control approach applied to station keeping of autonomous underwater vehicles without linear velocity measurements. Submitted to IEEE Transactions on Control Systems Technology, 2019.

- G. Allibert, M.-D. Hua, S. Krupínski, T. Hamel. Pipeline following by visual servoing for Autonomous Underwater Vehicles. Control Engineering Practice, Regular Paper, 82, 151-160, 2019.

- S. Krupínski, G. Allibert, M.-D. Hua, T. Hamel. An inertial-aided homography-based visual servo control approach for (almost) fully-actuated Autonomous Underwater Vehicles. IEEE Transactions on Robotics, Regular Paper, 33 (5), 1041-1060, 2017.

- L.-H. Nguyen, M.-D. Hua, G. Allibert, T. Hamel. Inertial-aided Homography-based Visual Servo Control of Autonomous Underwater Vehicles without Linear Velocity Measurements. In 21st International Conference on System Theory, Control and Computing (ICSTCC), pp. 9-16, 2017.

- S. Krupínski, R. Desouche, N. Palomeras, G. Allibert, M.-D. Hua. Pool testing of AUV visual servoing for autonomous inspection. In IFAC Workshop on Navigation, Guidance and Control of Underwater Vehicles (NGCUV’2015), Vol. 48, No. 2, pp. 274–280, 2015.

- M.-D. Hua, G. Allibert, S. Krupínski, T. Hamel. Homography-based visual servoing for autonomous underwater vehicles. In IFAC World Congress, Vol. 47, No. 3, pp. 5726-5733, 2014.

- S. Krupínski, G. Allibert, M.-D. Hua, T. Hamel. Pipeline tracking for fully-actuated autonomous underwater vehicle using visual servo control. In American Control Conference (ACC), 6196-6202, 2012.

Ongoing projects

- ASTRID CONGRE (2019-2021) Consortium: I3S, LIRMM and ALSEAMAR. I am the project coordinator. The objective of the project is to establish a new control paradigm for underwater vehicles that integrates modeling, control, estimation and optimization in a unified framework. At the heart of this methodology is the judicious exploitation of the main hydrodynamic forces in control design for a large class of autonomous underwater vehicles, which are typically underactuated. The result is a simple, fast and inexpensive approach that improves navigation capability and broadens the range of applications. More specifically, the project aims to propose new nonlinear control and sensor-based control techniques via an adapted modeling procedure that requires simple empirical calculations instead of heavy and expensive identification campaigns. Implementing the developed control laws for experiments also requires nonlinear observers adapted to the tasks assigned to the vehicles, such as inspection and surveillance operations. Sea trials are planned to demonstrate the approach to the specialized community. The involvement of ALSEAMAR, through its expertise, its experimental facilities and its location between the two other project partners (LIRMM and I3S), is a definite asset that allows the project to be shared coherently between theoretical developments (with potential applications) and technological and applied developments.

- FUI GreenExplorer (2017-2021)

Past projects

- CNRS PEPS CONGRE (2015-2017) Consortium: ISIR and I3S. I was the project coordinator.

PhD students

- Lam Hung NGUYEN (01/09/2015-now, co-advisor with T. Hamel). Subject: Nonlinear control of Autonomous Underwater Vehicles.

- Szymon KRUPINSKI (2010–2014, co-advisor with T. Hamel and G. Allibert, financed by the company Cybernétix). Subject: Offshore structure following by means of sensor servoing and sensor fusion. Thesis defended in July 2014, with highest honour.

Experimental platform

For initial experimental validations (such as vision-based control), we have purchased a BlueROV kit and upgraded it with high-level functionalities such as homography estimation and homography-based dynamic positioning.

RESEARCH THEME

Visual-Inertial Fusion and Nonlinear Observer design for Homography Estimation

Originating in the field of Computer Vision, the homography is an invertible mapping that relates two camera views of the same planar scene. It plays an important role in numerous computer vision and robotic applications where the scenarios involve man-made environments composed of (near) planar surfaces. Classical homography estimation algorithms from the computer vision community compute the homography on a frame-by-frame basis by solving algebraic constraints derived from correspondences of image features (points, lines, conics, contours, etc.). These algorithms, however, treat the homography as an incidental variable and do not attempt to improve (or filter) it over time. There is therefore a clear interest in developing alternative homography estimation algorithms that exploit the temporal correlation of data across a video sequence rather than algebraically computing an individual raw homography for each image. Nonlinear observers are well suited to this need.
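To make the classical frame-by-frame approach concrete, here is a minimal sketch of the algebraic method from exactly four point correspondences. It assumes the homography can be normalized so that its bottom-right entry equals 1 (which fails when that entry is zero), and all function names and numerical values are hypothetical. Practical pipelines typically use more points and a least-squares or robust solver.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def homography_from_4_points(src, dst):
    """Estimate H (with H[2][2] = 1) such that dst ~ H * src."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences are required")
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear constraints on H.
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]

def apply_homography(H, p):
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

# Synthetic check: generate correspondences from a known homography.
H_true = [[1.1, 0.05, 0.2], [-0.03, 0.95, -0.1], [0.01, 0.02, 1.0]]
src = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
dst = [apply_homography(H_true, p) for p in src]
H_est = homography_from_4_points(src, dst)
```

Each image is solved independently here, so measurement noise passes straight into the estimate. This is the limitation that motivates filtering the homography over time.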

In our recent work, we have developed nonlinear observers based on the underlying structure of the Special Linear group SL(3), which is isomorphic to the group of homographies. We have addressed the question of deriving an observer for a sequence of image homographies that takes image point-feature correspondences directly as input. The advantage of this observer over prior work by my co-authors is that it does not require individual raw image homographies as feedback information. This saves considerable computational resources and makes the proposed algorithm suitable for embedded systems with simple feature tracking software. Recently, we have implemented this algorithm for real-time applications such as image stabilization with a synchronized camera-IMU pair. The algorithm has been implemented in C++ with the OpenCV library, while the camera and IMU are synchronized using ROS. An advanced version of this code using CUDA for GPU implementation is also available. Extensions that take line and conic features into account are also considered in our recent work on nonlinear observer design on SL(3).
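The link between homographies and SL(3) can be illustrated with a short sketch. A homography matrix is defined only up to a non-zero scale factor, and dividing it by the real cube root of its determinant gives the unique representative with unit determinant. The code below shows only this normalization, not the observer itself, and the function names and matrix values are hypothetical.

```python
import math

def det3(H):
    (a, b, c), (d, e, f), (g, h, i) = H
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def project_to_sl3(H):
    """Rescale an invertible 3x3 matrix so that its determinant is 1."""
    d = det3(H)
    if d == 0.0:
        raise ValueError("a singular matrix is not a homography")
    # Real cube root that also handles a negative determinant.
    scale = math.copysign(abs(d) ** (1.0 / 3.0), d)
    return [[v / scale for v in row] for row in H]

# Hypothetical raw homography, e.g. from a frame-by-frame estimate.
H_raw = [[2.0, 0.1, 0.3], [0.0, 1.5, -0.2], [0.01, 0.02, 1.0]]
H_sl3 = project_to_sl3(H_raw)
det_after = det3(H_sl3)
```

Because rescaling does not change the image mapping, `H_raw` and `H_sl3` describe the same homography, and only the second is an element of SL(3).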

Related publications

- M.-D. Hua, J. Trumpf, T. Hamel, R. Mahony, P. Morin. Feature-based Recursive Observer Design for Homography Estimation and its Application to Image Stabilization. Asian Journal of Control, Special Issue, Regular Paper, 2019.

- T. Hamel, R. Mahony, J. Trumpf, P. Morin, M.-D. Hua. Homography estimation on the Special Linear Group based on direct point correspondence. In IEEE Conference on Decision and Control (CDC), pp. 7902-7908, 2011.

- M.-D. Hua, J. Trumpf, T. Hamel, R. Mahony, P. Morin. Point and line feature-based observer design on SL(3) for Homography estimation and its application to image stabilization. Provisionally accepted for Automatica, 2019.

- M.-D. Hua, T. Hamel, R. Mahony, G. Allibert. Explicit Complementary Observer Design on Special Linear Group SL(3) for Homography Estimation using Conic Correspondences. In IEEE Conference on Decision and Control (CDC), pp. 2434-2441, 2017.

- N. Manerikar, M.-D. Hua, T. Hamel. Homography Observer Design on Special Linear Group SL(3) with Application to Optical Flow Estimation. In European Control Conference (ECC), pp. 1602-1606, 2018.

- S. De Marco, M.-D. Hua, R. Mahony, T. Hamel. Homography estimation of a moving planar scene from direct point correspondence. In IEEE Conference on Decision and Control (CDC), 2018.