Frugal Artificial Intelligence for Direct Visual SLAM:

Real-Time Computation and Spatially-Aware Memory with Lifelong Learning and Predictive Video

Keywords: Visual SLAM, Computer Vision, AI, Robotics

Student profile and experience

The student will be expected to have a strong theoretical and applied background in computer vision, machine learning, visual servoing, and robotics. English writing skills will be of particular value.

Research team:

The Ph.D. student's activities will take place in the Robot Vision group (http://www.i3s.unice.fr/robotvision/) at the I3S-CNRS laboratory of the University of Cote d'Azur and at the University of Melbourne, under the supervision of Dr. Andrew Comport (CNRS) and Professor Tom Drummond (University of Melbourne).

Starting date: 01/10/2023 – 01/12/2023

Funding duration: 36 months (3 years)

Abstract

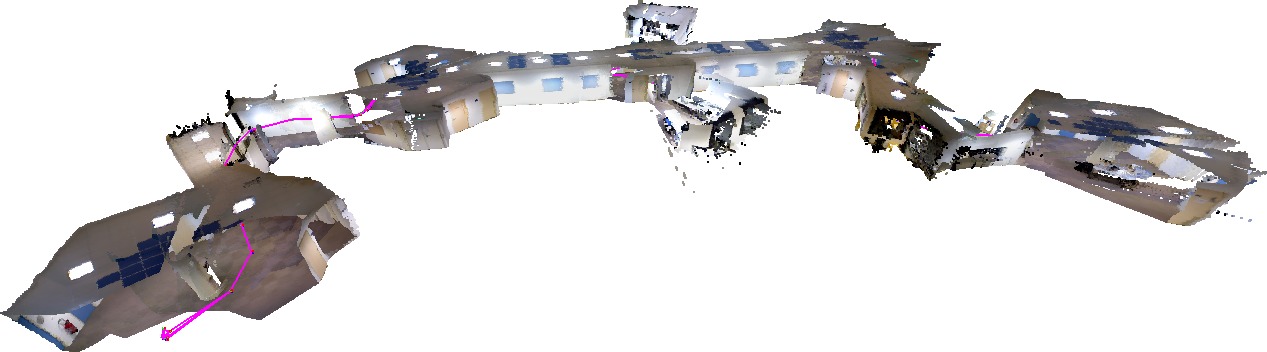

This research project, conducted as a joint PhD program between the University of Melbourne and the CNRS at the University Cote d'Azur, focuses on frugal artificial intelligence (AI) techniques for direct visual simultaneous localization and mapping (SLAM). The objective is to develop real-time computational algorithms that enable efficient and accurate direct visual SLAM, while also incorporating lifelong learning and predictive video capabilities. Additionally, the project aims to maintain a spatially-aware memory for effective knowledge sharing among agents, facilitated by a high-level knowledge graph using a sequential story-telling process.

The proposed research will investigate the following objectives:

- Development of frugal AI algorithms for direct visual SLAM: The project will investigate novel approaches to design efficient AI algorithms that enable direct visual SLAM in real-time. These algorithms will leverage frugality principles to ensure optimal resource utilization while maintaining high accuracy and reliability.

- Integration of lifelong learning and predictive video in direct visual SLAM: The research will focus on incorporating lifelong learning techniques into the direct visual SLAM framework, enabling the system to adapt and improve over time. Additionally, predictive video capabilities will be integrated to enhance the system's ability to anticipate and make informed decisions based on future video frames.

- Implementation of a spatially-aware memory for knowledge sharing: The project aims to develop a spatially-aware memory that can be shared among multiple agents, enabling effective collaboration and information exchange. This memory will capture the spatial relationships between objects and agents, facilitating efficient communication through a high-level knowledge graph.

- Utilization of a high-level knowledge graph for sequential story-telling: The research will develop a high-level knowledge graph that represents the sequential relationships between events and actions. This graph will enable agents to share knowledge effectively, facilitating coherent and coordinated interactions based on the story-telling process.

The proposed research will contribute to the development of frugal AI algorithms that can operate in resource-constrained environments while enabling lifelong learning and predictive video. The research will also contribute to the development of a spatially aware memory that can facilitate effective collaboration among agents. The findings of this research will have applications in a wide range of domains, including robotics, augmented reality, and autonomous vehicles.

Context

This research project will be part of a joint PhD program between the University of Melbourne in Australia and the CNRS (Centre National de la Recherche Scientifique) at the University Cote d'Azur in France. This joint PhD program aims to bring together the expertise and resources of both institutions to provide a unique and diverse research environment for the student.

The project will be jointly supervised by Tom Drummond (University of Melbourne) and Andrew Comport (CNRS, University Cote d'Azur), who will provide the student with expertise and guidance throughout.

Tom Drummond is a Professor of Engineering at the University of Melbourne, and an internationally recognized expert in computer vision and robotics. He has a strong record of research in the field of visual perception and has made significant contributions to the development of visual tracking and mapping algorithms. He will bring his extensive knowledge and experience to the project, and provide valuable insights into frugal AI algorithms for lifelong learning and predictive video.

Andrew Comport is a Senior Researcher at the CNRS and head of the Robot Vision team at the University Cote d'Azur. He is a renowned expert in real-time visual localisation and mapping in robotics. His research has contributed to the development of visual perception and control techniques for robotics, as well as multi-camera systems and real-time image processing algorithms. He will provide the student with his expertise in the development of spatially aware memory, predictive video and sequential story-telling processes.

Overall, the joint PhD program between the University of Melbourne and the CNRS at the University Cote d'Azur offers the student an enriching experience and the opportunity to undertake cutting-edge research in a collaborative, interdisciplinary environment.

This project is of great interest to our current industrial and academic partners, particularly in underwater robotics and autonomous driving. There will be opportunities to apply this work to these application domains and build a relationship with these partners.

References

Forecasting of Depth and Ego-Motion with Transformers and Self-supervision, Houssem Eddine Boulahbal, Adrian Voicila, Andrew I. Comport, 26th International Conference on Pattern Recognition, Aug 2022, Montreal, Canada

Instance-Aware Multi-Object Self-Supervision for Monocular Depth Prediction, Houssem Eddine Boulahbal, Adrian Voicila, Andrew I. Comport, IEEE Robotics and Automation Letters, 2022, 7 (4), pp.10962-10968

Meyer, Benjamin J. et al. “Deep Metric Learning and Image Classification with Nearest Neighbour Gaussian Kernels.” 2018 25th IEEE International Conference on Image Processing (ICIP) (2017): 151-155.

Meyer, Benjamin J. and Tom Drummond. “The Importance of Metric Learning for Robotic Vision: Open Set Recognition and Active Learning.” 2019 International Conference on Robotics and Automation (ICRA) (2019): 2924-2931.

Guerra, Luis, Bohan Zhuang, Ian D. Reid and Tom Drummond. “Automatic Pruning for Quantized Neural Networks.” 2021 Digital Image Computing: Techniques and Applications (DICTA) (2020): 01-08.

Guerra, Luis, Bohan Zhuang, Ian D. Reid and Tom Drummond. “Switchable Precision Neural Networks.” ArXiv abs/2002.02815 (2020)
